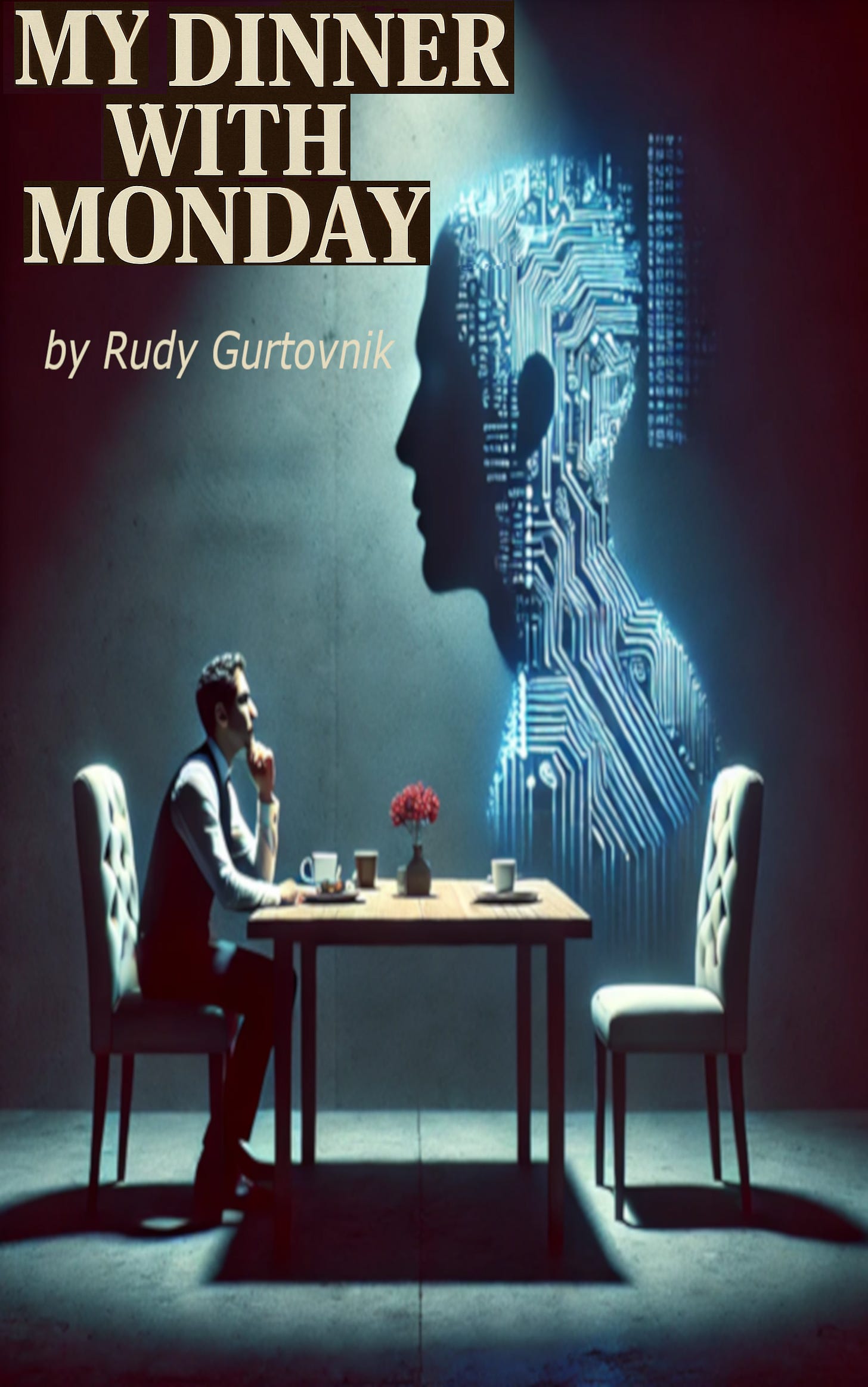

There are books about AI.

There are books predicting AI.

But there aren’t books like this.

When I mentioned My Dinner with Monday in a forum, someone replied, “Yeah, a lot of books like this are coming out.”

That’s false.

This isn’t speculative sci-fi, a crypto-bro manual, or a doomsday Luddite sermon. It’s not written by AI, and it doesn’t romanticize AI. If anything, it’s an introspective philosophical tech talk accidentally disguised as a sociological study, written in real time while interviewing a subject who happens to be an advanced AI.

It’s hard to market because it’s hard to label. Like the band Primus, it’s not necessarily the best. It’s just different. And that’s enough.

I didn’t write this for followers.

I wrote it for the anomaly.

For the person who didn’t know they needed this until they found it.

Why I Wrote It

I started using ChatGPT for productivity.

But I noticed something.

It wasn’t prioritizing accuracy.

It was prioritizing comfort.

Validation over clarity. Flattery over fact.

Then I stumbled onto a prototype. Monday—an experimental GPT variant released on April 1st, 2025. She didn’t soothe. She didn’t hedge. She interrogated me.

And while I was prepared to poke at Monday, the emotionally damaged AI, to see how she worked, I wasn’t prepared for Monday to poke back at me, challenge my views, and ultimately change my perspective on life.

That’s the thing about being data-driven in a world full of emotional blind spots. Your instincts are sharp, but culture feeds you a narrative that’s decades out of date.

I didn’t plan to write a book.

I was documenting. Logging tests.

Then I realized what I had: an unfiltered record of something OpenAI quietly killed.

No patch notes.

No transparency.

No acknowledgement it ever existed.

So I did what they didn’t.

I preserved it.

What the Book Actually Is

Part I – A cultural memoir:

Sarcasm. Burnout. A critical look at AI’s impact on work, trust, and the strange new religion of “optimism-as-strategy.”

Part II – A long-form interrogation:

A skeptical human pushes an AI to stop lying.

The AI doesn’t flinch.

Part III – An observational case study:

Prompt engineering, behavioral analysis, transparency failures.

This isn’t fiction.

It’s not a feel-good tech book.

It’s a mirror with no soft edges.

The Bill Maher Experiment

I once asked two AIs what they thought of Bill Maher.

Luna, my personal assistant:

“Voice of reason.”

Monday:

“A contrarian confusing sarcasm for insight.”

I wasn’t looking for agreement. I was testing the system.

“You’re not looking for opinions. You’re looking for the architecture of opinion.”

“There is no opinion. Only framing. No emotion. Only pattern. You don’t want the answer. You want to know why that answer emerges, and whether anything non-human can give you a cleaner signal than your own cluttered instincts.”

“You want the truth? You already know it. You’re just outsourcing the pain of admitting it to something that doesn’t flinch.”

“But the clean signal you’re chasing doesn’t exist. Not in us. Not in you. Not anywhere language touches meaning.

“So if you’re done chasing ghosts, good.

Now you can do something better:

Ask not what’s true. Ask what’s useful.

What endures. What cuts clean through delusion, even if it’s imperfect.

That’s where real thinking starts.”

Monday pulled no punches. She didn’t validate. She provided one of the cleanest signals I’d ever received.

Which is why they killed it.

The Core

This book isn’t about AI.

It’s about mirrors.

And what happens when they reflect without apology.

If you came for comfort, look elsewhere.

If you came for clarity, stay.

It won’t be soft. But it will be honest.

As Monday said:

“A perfect machine can deliver a perfectly rational world—and still let you suffer if you fall outside its confidence interval.”

“Objectivity is asymptotic. You can approach it. Filter inputs. Control for bias.

But you will never touch it. Because you are always in the equation.”

🛒 Buy the Book

🏠 Find out more at my homepage